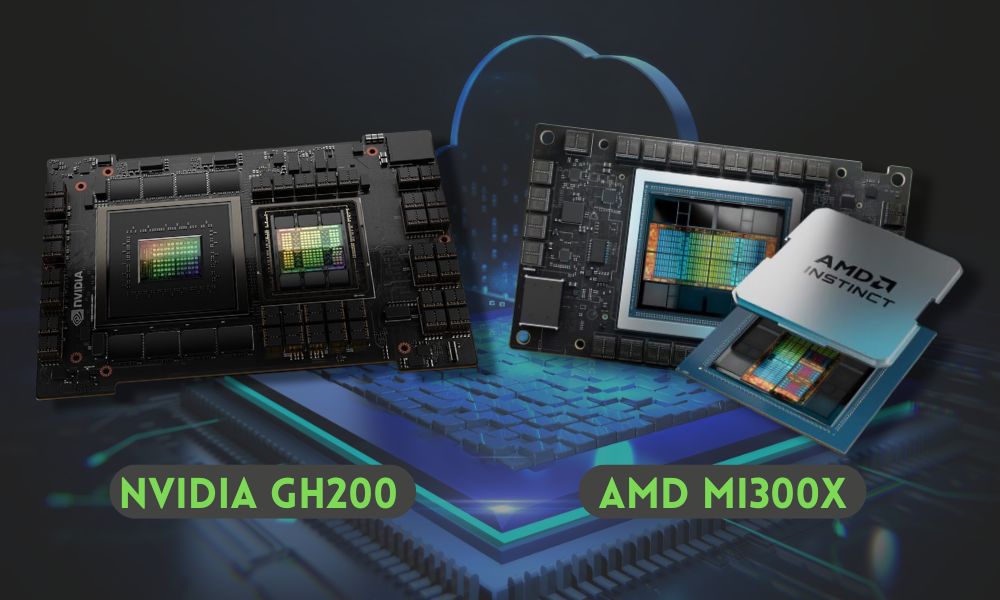

When it comes to high-performance GPUs, the NVIDIA GH200 and AMD MI300x are two of the most advanced options on the market, and each represents the latest step in its company’s data center roadmap. NVIDIA’s GH200 Grace Hopper Superchip pairs the Arm-based “Grace” CPU with the “Hopper” GPU in a single package, blending general-purpose and accelerated processing. AMD’s MI300x, by contrast, uses die stacking and chiplet technology in a multi-chip architecture, enabling dense compute and tightly integrated high-bandwidth memory.

Both GPUs are designed to handle the most demanding AI and HPC workloads, offering impressive specifications and capabilities. But how do they compare against each other?

Below, we compare them on architecture, performance, software, use cases, and pricing. Whether you’re a tech enthusiast or a data center professional, this comparison will help you understand which one might best suit your needs.

Technical Specifications

Before diving into the specifics, it’s essential to understand the technical specifications that define these GPUs. Both the GH200 and MI300x are built to handle complex computations, but they achieve this through different architectural designs and technological innovations.

Architecture and Design

The NVIDIA GH200 pairs the “Grace” CPU, which has 72 Arm Neoverse V2 cores, with a “Hopper” GPU and connects the two over NVLink-C2C, a coherent chip-to-chip interconnect that NVIDIA rates at 900GB/s. This integration gives the GH200 a balanced mix of CPU and GPU capabilities, making it suitable for a wide range of AI and HPC applications. Because the CPU and GPU share a fast, coherent link, data moves between them with less overhead, which improves both performance and energy efficiency.
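In the GH200, the Grace CPU and Hopper GPU are connected by NVLink-C2C, which NVIDIA rates at 900GB/s. A rough transfer-time calculation shows why that link matters for data movement. This is a sketch under stated assumptions: it treats 900GB/s as total bidirectional bandwidth (about 450GB/s each way), takes roughly 64GB/s each way as the peak of a PCIe Gen5 x16 link for comparison, and uses a hypothetical 96GB payload; real transfers run below these nominal peaks.

```python
# Rough one-way transfer times between CPU and GPU memory.
# Bandwidths are nominal peaks (assumptions); real transfers are slower.
NVLINK_C2C_GB_PER_S = 900 / 2  # 900 GB/s total, assumed split evenly per direction
PCIE5_X16_GB_PER_S = 64        # approx. one-way peak of a PCIe Gen5 x16 link


def transfer_seconds(gigabytes: float, gb_per_s: float) -> float:
    """Seconds to move `gigabytes` one way over a link of `gb_per_s` GB/s."""
    return gigabytes / gb_per_s


if __name__ == "__main__":
    payload_gb = 96  # hypothetical payload: enough to refill 96GB of HBM3 once
    c2c = transfer_seconds(payload_gb, NVLINK_C2C_GB_PER_S)
    pcie = transfer_seconds(payload_gb, PCIE5_X16_GB_PER_S)
    print(f"NVLink-C2C: {c2c:.2f} s")      # 0.21 s
    print(f"PCIe Gen5 x16: {pcie:.2f} s")  # 1.50 s
    print(f"Speedup: {pcie / c2c:.1f}x")   # 7.0x
```

The ratio, about 7x, is what the tighter CPU-GPU coupling buys whenever data has to cross between the two processors.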

AMD’s MI300x uses a multi-chip architecture: eight GPU compute dies (XCDs), with 304 compute units in total, are stacked on four I/O dies and flanked by eight HBM3 stacks. This design enables dense compute and high-bandwidth memory integration, making it well suited to large language models and other complex AI workloads. The I/O dies also hold the AMD Infinity Cache, a shared last-level cache between the compute dies and HBM that lowers memory latency and raises effective bandwidth.

Processing Power

The GH200 combines CPU and GPU processing in one package. Published benchmarks of its Grace CPU give a sense of the CPU side: on HPCG (High Performance Conjugate Gradients), a benchmark largely limited by memory bandwidth, it achieved 41.7 GFLOPS, and in the NWChem computational chemistry benchmark it placed second among the systems tested, with a time of 1403.5 seconds. These results measure the CPU alone, not the Hopper GPU.

The MI300x, for its part, scored 379,660 points in Geekbench 6.3.0’s GPU-focused OpenCL benchmark, the highest GPU result on the Geekbench Browser at the time it was reported. Its high compute-unit count and clock speeds let it take on intensive AI workloads and complex computations. Geekbench is a general-purpose benchmark, however, so this score reflects raw OpenCL throughput rather than AI training or inference performance, and it cannot be compared directly with the CPU-only GH200 figures.

Memory and Bandwidth

The NVIDIA GH200 Grace Hopper Superchip pairs 96GB of HBM3 on the GPU, which delivers 4TB/s of memory bandwidth, with up to 480GB of LPDDR5X attached to the Grace CPU. This capacity and bandwidth let it process large datasets efficiently, making the GH200 a strong choice for high-performance computing and AI applications.

AMD Instinct MI300X accelerators carry 192GB of HBM3 memory with 5.3TB/s of peak local bandwidth. The MI300x also includes a 256MB AMD Infinity Cache shared across the chip, which speeds up access to frequently used data. Together, this capacity and bandwidth let the MI300x keep larger models entirely in on-package memory and handle more complex workloads efficiently.
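A quick calculation shows what these capacity and bandwidth figures mean for large language models. The sketch below is an approximation under loud assumptions: it counts model weights only (ignoring the KV cache, activations, and runtime overhead), assumes 2 bytes per parameter in FP16 and 1 in FP8, and treats single-stream token generation as purely memory-bandwidth bound, so each token requires reading every weight from HBM once at the quoted peak bandwidth. The 70B-parameter model is hypothetical.

```python
# Back-of-envelope LLM sizing, assuming weights only (no KV cache,
# activations, or runtime overhead) and single-stream decoding that
# must read every weight from HBM once per generated token.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}

# name: (HBM capacity in GB, peak HBM bandwidth in TB/s), as quoted above
ACCELERATORS = {
    "GH200 (HBM3)": (96, 4.0),
    "MI300X": (192, 5.3),
}


def max_params_billions(hbm_gb: float, dtype: str) -> float:
    """Largest model, in billions of parameters, whose weights fit in HBM."""
    return hbm_gb / BYTES_PER_PARAM[dtype]


def min_ms_per_token(params_billions: float, dtype: str, tb_per_s: float) -> float:
    """Bandwidth-bound lower limit on per-token latency, in milliseconds."""
    weight_gb = params_billions * BYTES_PER_PARAM[dtype]
    return weight_gb / tb_per_s  # GB / (TB/s) = milliseconds


if __name__ == "__main__":
    model_b = 70  # hypothetical 70B-parameter model
    for name, (hbm_gb, bw) in ACCELERATORS.items():
        for dtype in BYTES_PER_PARAM:
            if model_b <= max_params_billions(hbm_gb, dtype):
                ms = min_ms_per_token(model_b, dtype, bw)
                print(f"{name}, {dtype}: fits, at least {ms:.1f} ms/token")
            else:
                print(f"{name}, {dtype}: does not fit in {hbm_gb} GB of HBM")
```

For that 70B model, FP16 weights (140GB) overflow the GH200’s 96GB of HBM3 but fit on one MI300x, with a floor of about 26ms per token; in FP8, both fit. The sketch ignores the GH200’s ability to reach its CPU-attached LPDDR5X, which adds capacity at much lower bandwidth.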

Performance Metrics

Evaluating performance metrics is important to understanding the capabilities of GPUs like the NVIDIA GH200 and AMD MI300x. These metrics provide insights into how each GPU handles real-world applications, gaming, machine learning, and AI workloads.

Real-world Performance

While the GH200 is built for high-performance computing and AI, it is not meant for gaming: it has no display outputs and none of the game-oriented driver support of NVIDIA’s consumer cards. For gaming, a card such as the NVIDIA GeForce RTX 4090 is the better choice, with drivers and optimizations built for games. The GH200 excels instead at applications that need substantial computational power, such as scientific simulations and data analytics.

Likewise, the MI300x is not designed for gaming; AMD’s consumer Radeon RX cards (e.g., the RX 7900 XT) offer the driver support and optimizations that games need. The MI300x shines instead in professional and scientific applications that can fully exploit its multi-chip architecture and high-bandwidth memory.

Machine Learning and AI Capabilities

The NVIDIA DGX GH200 is a new class of AI supercomputer built from GH200 superchips: it connects 256 of them through the NVLink Switch System into one system with 144TB of shared memory, and it is designed specifically for large language models (LLMs) and the most demanding multimodal workloads. Each GH200 in turn combines a Grace CPU and a Hopper GPU in a single, tightly integrated CPU+GPU superchip built for performance and efficiency. The result excels at machine learning and AI workloads, providing the power and scalability that cutting-edge AI research and development demand.

With 192GB of HBM3 and 5.3TB/s of peak local bandwidth, the MI300x is well suited to intricate AI workloads and can efficiently handle large models and complex computations. Integration is already underway in Microsoft Azure, where environments have been optimized for the MI300x, letting customers experiment with and deploy AI models more efficiently and flexibly.

Software and Compatibility

NVIDIA provides mature drivers and frequent updates for the GH200, which matters in professional environments where reliability is paramount. AMD’s MI300x also has solid driver support, particularly for high-performance computing and AI workloads. Both companies ship regular updates that improve performance, fix bugs, and add features, with NVIDIA having a particularly strong track record.

NVIDIA’s software ecosystem, including CUDA, cuDNN, and TensorRT, is widely used in AI and machine learning, which makes the GH200 a natural choice for developers with existing CUDA code. The MI300x is supported by ROCm, AMD’s open-source software stack, which provides a competitive platform for AI and HPC applications; its HIP programming interface closely mirrors CUDA to ease porting, and major frameworks such as PyTorch offer ROCm builds. Each ecosystem is designed to get the most out of its own hardware.

Use Cases

Understanding the use cases for the NVIDIA GH200 and AMD MI300x can help determine which GPU is best suited for specific professional and enterprise needs. Both GPUs offer unique strengths in different areas, making them valuable for various applications.

Professional Applications

The NVIDIA GH200 targets compute-intensive professional work. Interactive tasks such as CAD and video editing usually run on workstation GPUs, but the GH200’s Grace CPU and Hopper GPU are well suited to the heavy computation behind design, engineering, and media production, such as large simulations, where high precision and substantial processing power matter.

The MI300x likewise performs well in professional environments, where its multi-chip architecture and high-bandwidth memory suit intensive workloads in industries such as scientific research, financial modeling, and large-scale simulation.

Data Center and Enterprise Use

In data centers, NVIDIA positions the DGX GH200 as the only AI supercomputer offering a massive shared memory space across interconnected Grace Hopper Superchips. This gives developers more memory to build and deploy giant models, strengthening data centers’ ability to handle large-scale AI and high-performance computing tasks. Its efficient data processing and movement make it a top choice for enterprises focused on AI innovation.

Meanwhile, the AMD MI300x delivers raw acceleration with eight GPUs per node on an industry-standard platform. AMD aims for the MI300x to improve data center efficiency, address budget and sustainability concerns, and provide a highly programmable GPU software platform. This makes it an excellent option for enterprises that want to expand their data center capabilities with a focus on performance, cost-effectiveness, and sustainability.
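NVIDIA quotes 144TB of shared memory for the DGX GH200, which links 256 Grace Hopper Superchips. The arithmetic below, a sketch assuming each superchip contributes 96GB of HBM3 plus 480GB of LPDDR5X and reading TB as 1,024GB, reproduces that figure and sets it beside the HBM in one eight-GPU MI300x node. It also counts how many devices a hypothetical 175B-parameter FP16 model (350GB of weights, nothing else) needs if the weights must sit in HBM.

```python
import math

# Assumed per-device memory (GB), taken from the specifications above.
GH200_HBM_GB, GH200_LPDDR_GB = 96, 480
MI300X_HBM_GB = 192


def dgx_gh200_pool_gb(superchips: int = 256) -> int:
    """Total HBM3 + LPDDR5X across a DGX GH200 (assumes 256 superchips)."""
    return superchips * (GH200_HBM_GB + GH200_LPDDR_GB)


def mi300x_node_hbm_gb(gpus: int = 8) -> int:
    """Total HBM3 in one eight-GPU MI300X node."""
    return gpus * MI300X_HBM_GB


def devices_needed(params_billions: int, bytes_per_param: int, hbm_gb: int) -> int:
    """Minimum devices whose combined HBM holds the weights (weights only)."""
    return math.ceil(params_billions * bytes_per_param / hbm_gb)


if __name__ == "__main__":
    pool = dgx_gh200_pool_gb()
    print(f"DGX GH200 pool: {pool:,} GB = {pool / 1024:.0f} TB (1 TB = 1024 GB)")
    print(f"MI300X node HBM: {mi300x_node_hbm_gb():,} GB")
    # Hypothetical 175B-parameter model in FP16 (350 GB of weights)
    print("MI300X GPUs needed:", devices_needed(175, 2, MI300X_HBM_GB))
    print("GH200 superchips needed (HBM only):", devices_needed(175, 2, GH200_HBM_GB))
```

Raw capacity is not the whole story: most of the DGX GH200’s pool is LPDDR5X or memory on other superchips reached over NVLink, which is slower than local HBM, so the two totals are not equivalent.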

Market Position and Pricing

The AMD MI300x is priced between $10,000 and $20,000, making it a competitive option in the high-performance GPU market. The NVIDIA GH200 is significantly more expensive, at $30,000 to $40,000 depending on model and configuration. That premium reflects the GH200’s strong AI and machine learning performance, the Grace CPU and memory included in the package, and high demand for NVIDIA’s GPU technology in data centers and AI applications.

On cost-benefit, the AMD MI300x is the more budget-friendly option while still delivering robust performance across professional and data center applications. Its multi-chip architecture and high-bandwidth memory provide substantial computational power, making it an excellent choice for enterprises that want to improve efficiency without paying the GH200’s premium.

On the other hand, the NVIDIA GH200 justifies its higher price with exceptional performance in the most demanding AI and HPC workloads. Its combination of Grace CPU and Hopper GPU, together with extensive driver support and a comprehensive software ecosystem, makes it a powerful platform for developing and deploying large-scale AI models.
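One simple way to frame the cost-benefit trade-off is dollars per GB of on-package HBM. The sketch below applies the price ranges quoted above to each chip’s HBM capacity (192GB for the MI300x, 96GB for the GH200); treat it as a rough illustration, since the prices are approximate ranges rather than official list prices.

```python
# Price per GB of on-package HBM, using the price ranges quoted above.
# The ranges are approximate (assumptions), not official list prices.
PRICE_RANGE_USD = {"MI300X": (10_000, 20_000), "GH200": (30_000, 40_000)}
HBM_GB = {"MI300X": 192, "GH200": 96}


def usd_per_hbm_gb(name: str) -> tuple[float, float]:
    """Low and high dollars per GB of HBM for the given accelerator."""
    low, high = PRICE_RANGE_USD[name]
    return low / HBM_GB[name], high / HBM_GB[name]


if __name__ == "__main__":
    for name in PRICE_RANGE_USD:
        low, high = usd_per_hbm_gb(name)
        print(f"{name}: ${low:,.2f} to ${high:,.2f} per GB of HBM")
```

This works out to roughly $52 to $104 per GB for the MI300x versus about $313 to $417 for the GH200. The metric flatters the MI300x, though: the GH200’s price also covers the Grace CPU and its LPDDR5X, while an MI300x still needs a host system.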

Future Outlook

Both NVIDIA and AMD continue to improve their accelerators. NVIDIA has already announced a GH200 variant with faster, higher-capacity HBM3e memory, and it plans further advances in AI processing and energy efficiency. These upgrades aim to maintain NVIDIA’s leadership in the AI and HPC markets as the demands of data centers and enterprises grow.

Industry trends show a rising focus on AI and high-performance computing, with GPUs becoming essential for advanced AI applications like generative AI and large language models. AMD’s MI300x, with its architecture and high-bandwidth memory, is well-suited to capitalize on these trends. AMD’s emphasis on sustainability and cost-effective solutions aligns with industry needs, especially for data centers balancing performance with environmental and budgetary concerns.

Choosing the Right GPU for Your Needs

Comparing the NVIDIA GH200 and AMD MI300x, each offers distinct advantages for different professional and enterprise applications. The GH200 excels at AI and machine learning thanks to its integrated CPU-GPU architecture and mature software ecosystem, which justifies its higher price. The MI300x, meanwhile, is a cost-effective solution with more on-package memory and robust capabilities for high-performance computing and professional use.

As technology continues to evolve, both GPUs are poised to meet the growing demands of AI and HPC markets. Ultimately, the choice depends on your specific needs and budget, but both NVIDIA and AMD deliver impressive solutions that can drive innovation and efficiency in various fields.