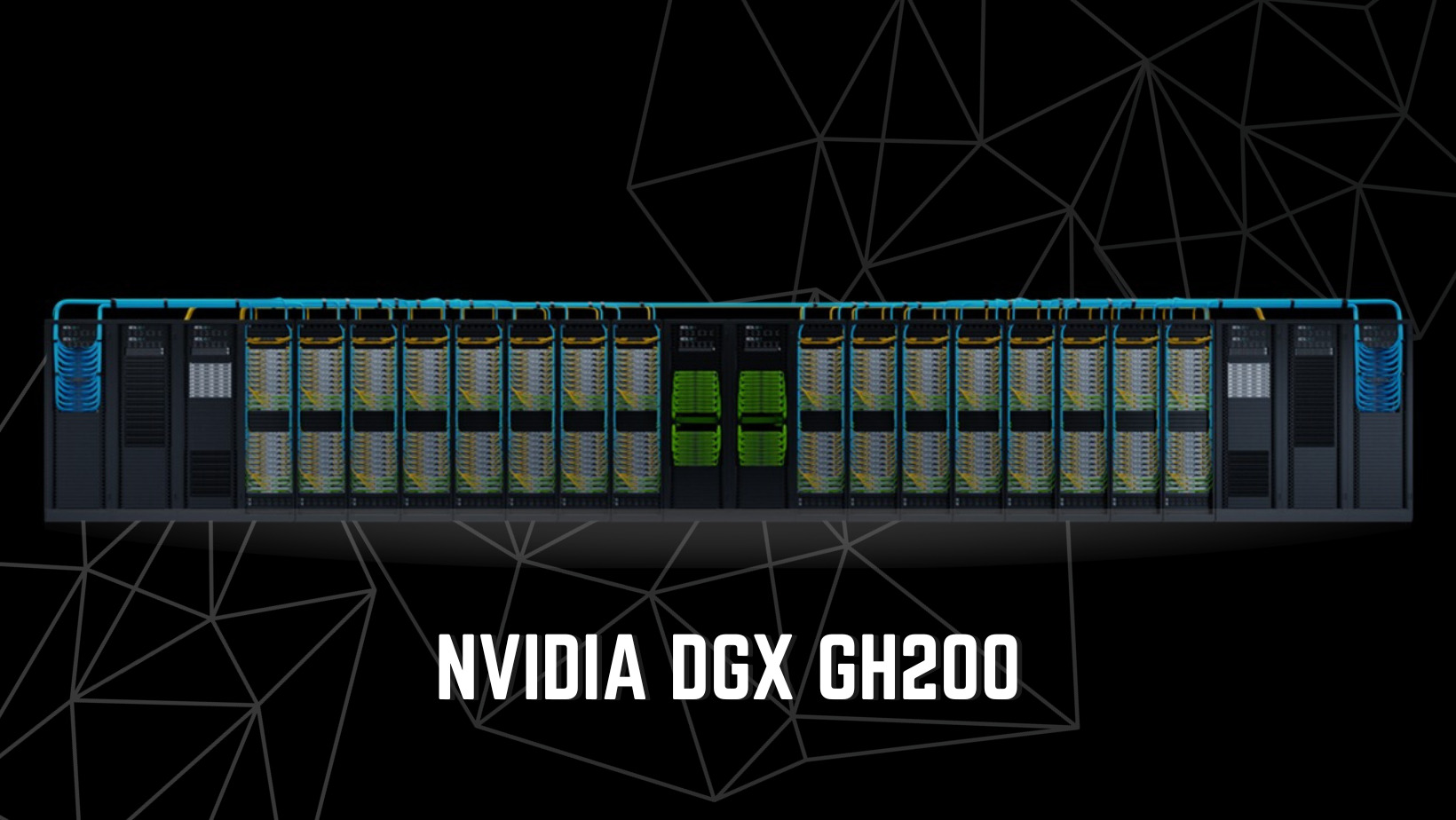

Nvidia has consistently been at the forefront of graphics processing unit (GPU) technology, and its DGX series of AI systems has garnered attention from professionals and tech enthusiasts alike. In this comparison, we look at two DGX systems: the Nvidia DGX GH200, built around the GH200 Grace Hopper Superchip, and the Nvidia DGX H100, built around the H100 GPU. Whether you’re a seasoned data scientist, an HPC developer, or a tech enthusiast looking for the best in performance, this article is here to help you make an informed decision when choosing between the two.

Key Differences: Unraveling the Distinctions

Several key differences can significantly impact your choice when comparing the Nvidia GH200 and the Nvidia H100. Let’s break down these differences to give you a clearer picture of what sets these GPUs apart.

Processor

At the heart of each system lies its processor, a fundamental determinant of performance. Both systems are built on Nvidia’s Hopper GPU architecture, which is designed for AI and high-performance computing; Ampere is the previous generation, used in the A100. The difference lies in how the GPU is packaged. The DGX H100 pairs eight H100 GPUs with x86 host CPUs, while the DGX GH200 is assembled from GH200 Grace Hopper Superchips, each of which couples a Hopper GPU to an Arm-based Grace CPU over the NVLink-C2C chip-to-chip link. The choice therefore hinges less on GPU architecture and more on how tightly your workload needs the CPU, GPU, and memory to be coupled.

Memory

Memory capacity plays a vital role in handling data-intensive tasks. Each GH200 superchip combines HBM3 GPU memory with LPDDR5X CPU memory that the GPU can address directly, and Nvidia quotes up to 144TB of shared memory for a full 256-superchip DGX GH200, making it well suited to projects with vast datasets and complex simulations. The DGX H100 offers 640GB of HBM3 in total (eight H100 GPUs with 80GB each), a substantial figure that still falls far short of the GH200’s capacity. Your selection should align with the scale of your projects and the volume of data you need to process efficiently.
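As a rough illustration of how memory capacity translates into model size, the following Python sketch estimates the memory needed just to hold a model’s weights and checks it against a capacity. The function names, the 70-billion-parameter model, and the capacity values are illustrative assumptions rather than figures from Nvidia, and the estimate ignores activations, optimizer state, and framework overhead.

```python
def weights_gb(num_params: int, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits(required_gb: float, capacity_gb: float) -> bool:
    """True if the requirement does not exceed the available capacity."""
    return required_gb <= capacity_gb


# Hypothetical model: 70 billion parameters stored as 16-bit values (2 bytes each).
required = weights_gb(70_000_000_000, 2)

# Placeholder capacities in GB; substitute the figures for the system you are evaluating.
for capacity in (80, 640):
    print(f"{required:.0f} GB needed, {capacity} GB available: fits={fits(required, capacity)}")
```

A check like this only gives a lower bound on what a workload needs, but it is often enough to rule a configuration in or out before looking at detailed benchmarks.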

Connectivity

In high-performance systems, connectivity is paramount. Both systems use fourth-generation NVLink, which offers higher bandwidth than the third-generation NVLink found on Ampere-based systems. What differs is the scale of the NVLink domain: the DGX H100 connects its eight GPUs through NVSwitch inside a single chassis, while the DGX GH200 uses the NVLink Switch System to join up to 256 superchips so that they can be addressed as one large GPU memory space. This affects data transfer rates and overall system performance, making it a crucial consideration for workloads that span many GPUs.
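To get a feel for what interconnect bandwidth means in practice, the sketch below computes an idealized transfer time as payload size divided by peak bandwidth. The function name and the 140 GB payload are hypothetical; the 900 GB/s and 600 GB/s values are the peak per-GPU figures Nvidia quotes for fourth- and third-generation NVLink. Real transfers will be slower because of protocol overhead, topology, and contention, so treat the result as a lower bound.

```python
def ideal_transfer_seconds(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Lower bound on transfer time: payload size divided by peak bandwidth."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / bandwidth_gb_per_s


# Hypothetical payload, for example a large set of model weights.
payload_gb = 140.0

# Peak per-GPU NVLink bandwidth by generation, in GB/s.
links = {"NVLink 4": 900.0, "NVLink 3": 600.0}

for name, bandwidth in links.items():
    seconds = ideal_transfer_seconds(payload_gb, bandwidth)
    print(f"{name}: at least {seconds:.3f} s to move {payload_gb:.0f} GB")
```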

Other Features

Beyond the core specifications, additional features set the DGX GH200 apart. It pairs each GPU with the Arm-based Grace CPU and ships with a software stack tailored for modern workloads and a cooling design intended to keep temperatures in check during intensive tasks. The DGX H100 uses x86 host CPUs instead, so the tightly coupled CPU and GPU design is specific to the GH200. If your work demands these capabilities, the GH200 emerges as the clear choice.

Use Cases: Elevating Your Possibilities

To appreciate the strengths of the Nvidia GH200 and H100, it’s worth considering the specific use cases where each one shines. Both cater to a wide range of industries and tasks. Here are some key areas where they excel:

Artificial Intelligence (AI)

Both the Nvidia GH200 and H100 are well-suited for AI applications. Because both are built on Hopper GPUs, their per-GPU deep learning capabilities are similar; the GH200 stands out on models too large for a single node, thanks to its larger memory pool and tightly coupled Grace CPU. The H100, while not far behind, offers well-rounded AI performance suitable for a wide array of applications, including computer vision and natural language processing.

Machine Learning

Machine learning tasks often require significant computational power and memory capacity. The GH200’s large memory pool and computational prowess make it a top choice for machine learning practitioners dealing with large datasets and complex models. The H100, also built on the Hopper architecture, remains a formidable contender for machine learning and may be more cost-effective for specific applications.

Data Science

Data scientists rely on GPUs for rapid data analysis and model training. The GH200’s massive memory and advanced architecture make it a robust choice for data scientists handling big data projects. The H100, though slightly less equipped in terms of memory, offers excellent data manipulation and analysis performance.

High-Performance Computing (HPC)

When it comes to high-performance computing, both systems perform strongly. The GH200’s large NVLink domain and exceptional processing power make it an excellent choice for HPC tasks that demand fast data transfer and intense calculations. The H100, with the same Hopper GPU architecture, is also well-suited for HPC workloads and offers a competitive alternative.

Quantum Computing

Quantum computing simulations require substantial computational resources. The GH200’s raw power and memory capacity make it a valuable asset for researchers and organizations exploring the quantum realm. The H100, while formidable, may be a more cost-effective choice for quantum computing simulations, depending on specific project requirements.

Visualization

Both GPUs deliver impressive performance for professionals in fields like 3D rendering, animation, and scientific visualization. The GH200’s advanced architecture and expansive memory support intricate visualizations and simulations. The H100 offers a cost-effective solution for visualization tasks that may not require the GH200’s top-tier capabilities.

Performance: Setting New Benchmarks

Performance is a critical factor when choosing between these systems. Here’s a closer look at how they compare:

Computational Performance

The DGX GH200 is claimed to deliver up to 2 times the FP32 performance and three times the FP64 performance of the DGX H100, although the exact ratio depends on how each system is configured. This makes it a strong choice for applications that demand immense computational power, such as complex simulations and scientific computing.

Handling Large Datasets and Complex Models

In addition to superior computational performance, the GH200 can handle larger datasets and more complex machine-learning models than the H100. Its shared memory pool, up to 144TB across a full DGX GH200, provides ample space for holding and manipulating data, enabling data scientists and researchers to work with extensive datasets and intricate deep-learning models efficiently.
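One way to reason about "larger models" is to ask how many devices are needed just to hold a model’s training state. The sketch below does this with ceiling division. The function name is hypothetical, the 16 bytes per parameter is a commonly used rule of thumb for mixed-precision training with an Adam-style optimizer (an assumption, not an Nvidia figure), and the 80 GB per-device capacity is an illustrative value. Activations and framework overhead are ignored, so real requirements will be higher.

```python
def min_devices(num_params: int, bytes_per_param: int, device_capacity_gb: int) -> int:
    """Smallest number of devices whose combined memory can hold the given state."""
    if device_capacity_gb <= 0:
        raise ValueError("device capacity must be positive")
    total_bytes = num_params * bytes_per_param
    capacity_bytes = device_capacity_gb * 10**9
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-total_bytes // capacity_bytes)


params = 70_000_000_000  # hypothetical 70-billion-parameter model

# Weights only at 16-bit precision (2 bytes per parameter).
print("inference, weights only:", min_devices(params, 2, 80), "devices")

# Assumed ~16 bytes per parameter for weights, gradients, and optimizer state.
print("training state:", min_devices(params, 16, 80), "devices")
```

The fewer devices a model has to be split across, the less of the work is spent on communication between them, which is why a larger memory pool per system matters for very large models.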

Pricing and Availability: What to Expect

When deciding between the Nvidia GH200 and H100, pricing and availability are essential factors to consider.

The DGX GH200 is highly anticipated and is expected to hit the market in early 2024. Nvidia has yet to officially announce pricing for this system. While the absence of pricing details leaves some uncertainty, it’s often a sign of a premium product that aims to set a new standard in the industry. If you’re looking for cutting-edge technology and are willing to invest in the latest advancements, the GH200 is worth keeping an eye on.

On the other hand, the DGX H100 is readily available for purchase. Nvidia has priced the H100 starting at $199,999. While it might seem like a substantial investment, it’s important to consider the H100’s capabilities and how they align with your specific needs. The H100 offers a robust performance package for various applications and, as of now, represents a more accessible option for those who require high-performance computing today.

Summary

The Nvidia DGX GH200 represents the next evolution of supercomputers. With substantial performance enhancements compared to its predecessor, the DGX H100, the GH200 emerges as the ultimate choice for various demanding use cases. Its availability in early 2024 is a highly anticipated milestone for professionals and enthusiasts alike.

Whether you’re working in artificial intelligence, machine learning, data science, high-performance computing, quantum computing, or visualization, the DGX GH200 promises to deliver the power and capabilities needed to take your projects to the next level. Stay tuned for the arrival of this groundbreaking system, and be prepared to embrace a new era of computational excellence.